Category: Artificial Intelligence

Blog Entry © Thursday, March 27, 2025, by James Pate Williams, Jr., BA, BS, Master of Software Engineering, PhD. Lithium (Li, Z = 3) Total Ground-State Energy Numerical Experiments

Revised Helium Ground-State Total Energy Computation (c) Sunday, March 23, 2025, by James Pate Williams, Jr.

Blog Entry (c) Monday, January 20, 2025, by James Pate Williams, Jr. Solution of a Nonlinear Equation Using a Back-Propagation Neural Network

The equation solved is f(x, y, z, u) = x + y * y + z * z * z + u * u * u * u = 0.

Blog Entry © Tuesday, January 14, 2025, by James Pate Williams, Jr. Solution of an 8×8 System of Nonlinear Equations Using a Hybrid Algorithm

Blog Entry © Tuesday, January 7 – Thursday, January 9, 2025, by James Pate Williams, Jr. Solution of a System of Nonlinear Equations Using Damped Newton’s Method for a System of Equations

First United Methodist Church (FUMC) of LaGrange, Georgia Historical Attendance Data for the Year 2006 © James Pate Williams, Jr., BA, BS, Master of Software Engineering, PhD and FUMC, Friday, December 20, 2024, to Tuesday, December 24, 2024

Artificial Neural Network Learns the Exclusive OR (XOR) Function by James Pate Williams, Jr.

The exclusive OR (XOR) function is a well-known two-input binary function in computer architecture. Its truth table has four rows, with input pairs 00, 01, 10, and 11 and outputs 0, 1, 1, and 0, respectively. In other words, XOR is false for like inputs and true for unlike inputs. The function is also well known in cryptography, where it serves as both the encryption and the decryption function of the stream cipher called the one-time pad. The one-time pad is perfectly secure provided the pad is truly random and no part of it is ever reused. The original single-layer feedforward neural network (the perceptron) cannot generate the outputs of the XOR function, because XOR is not linearly separable. This limitation was overcome by adding a hidden layer trained with back-propagation. Because the logistic (also known as sigmoid) activation function approaches 0 and 1 only asymptotically, the target outputs are adjusted to 0.1 and 0.9.
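The two XOR properties described above (the truth table, and the fact that one function both encrypts and decrypts) can be sketched in a few lines. This is only an illustration: the class `XorDemo` and the method `Apply` are hypothetical names and are not part of the BPNNTestOne project, and the fixed pad is used for repeatability, whereas a real one-time pad needs a truly random pad that is never reused.

```csharp
using System;
using System.Text;

public static class XorDemo
{
    // XOR each data byte with the pad byte at the same position.
    // Applying the same pad a second time restores the original data.
    public static byte[] Apply(byte[] data, byte[] pad)
    {
        if (pad.Length < data.Length)
            throw new ArgumentException("Pad must be at least as long as the data.");
        byte[] result = new byte[data.Length];
        for (int i = 0; i < data.Length; i++)
            result[i] = (byte)(data[i] ^ pad[i]);
        return result;
    }

    public static void Main()
    {
        // Truth table: 0 for like inputs, 1 for unlike inputs.
        for (int a = 0; a <= 1; a++)
            for (int b = 0; b <= 1; b++)
                Console.WriteLine($"{a} {b} -> {a ^ b}");

        byte[] message = Encoding.ASCII.GetBytes("XOR");
        byte[] pad = { 0x5A, 0xC3, 0x0F }; // illustrative fixed pad, NOT random
        byte[] cipher = Apply(message, pad);  // encrypt
        byte[] plain = Apply(cipher, pad);    // decrypt with the same function
        Console.WriteLine(Encoding.ASCII.GetString(plain)); // prints "XOR"
    }
}
```

The same `Apply` call is used in both directions because (m XOR k) XOR k = m for every bit.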

    using System;
    using System.Windows.Forms;

    namespace BPNNTestOne
    {
        public class BPNeuralNetwork
        {
            // Initial weights are drawn uniformly from [-RANGE, +RANGE].
            private const double RANGE = 0.1;
            private double learningRate, momentum, tolerance;
            private double[] deltaHidden, deltaOutput, h, o, p;
            private double[,] newV, newW, oldV, oldW, v, w, O, T, X;
            private int numberHiddenUnits, numberInputUnits;
            private int numberOutputUnits, numberTrainingExamples;
            private int maxEpoch;
            private Random random;
            private TextBox tb;

            // Outputs of the most recent forward pass; index 0 is unused.
            public double[] P
            {
                get
                {
                    return p;
                }
            }

            // Uniform random number between x_0 and x_1 (swapped if out of order).
            double random_range(double x_0, double x_1)
            {
                double temp;

                if (x_0 > x_1)
                {
                    temp = x_0;
                    x_0 = x_1;
                    x_1 = temp;
                }

                return (x_1 - x_0) * random.NextDouble() + x_0;
            }

            // alpha = momentum, eta = learning rate, threshold = error tolerance.
            public BPNeuralNetwork
            (
                double alpha,
                double eta,
                double threshold,
                int nHidden,
                int nInput,
                int nOutput,
                int nTraining,
                int nMaxEpoch,
                int seed,
                TextBox tbox
            )
            {
                int i, j, k;

                random = new Random(seed);
                learningRate = eta;
                momentum = alpha;
                tolerance = threshold;
                numberHiddenUnits = nHidden;
                numberInputUnits = nInput;
                numberOutputUnits = nOutput;
                numberTrainingExamples = nTraining;
                maxEpoch = nMaxEpoch;
                tb = tbox;

                // Arrays are 1-based; index 0 of h holds the hidden-layer bias.
                h = new double[numberHiddenUnits + 1];
                o = new double[numberOutputUnits + 1];
                p = new double[numberOutputUnits + 1];
                h[0] = 1.0;
                deltaHidden = new double[numberHiddenUnits + 1];
                deltaOutput = new double[numberOutputUnits + 1];

                // v: hidden-to-output weights, w: input-to-hidden weights.
                newV = new double[numberHiddenUnits + 1, numberOutputUnits + 1];
                oldV = new double[numberHiddenUnits + 1, numberOutputUnits + 1];
                v = new double[numberHiddenUnits + 1, numberOutputUnits + 1];
                newW = new double[numberInputUnits + 1, numberHiddenUnits + 1];
                oldW = new double[numberInputUnits + 1, numberHiddenUnits + 1];
                w = new double[numberInputUnits + 1, numberHiddenUnits + 1];

                for (j = 0; j <= numberHiddenUnits; j++)
                {
                    for (k = 1; k <= numberOutputUnits; k++)
                    {
                        oldV[j, k] = random_range(-RANGE, +RANGE);
                        v[j, k] = random_range(-RANGE, +RANGE);
                    }
                }

                for (i = 0; i <= numberInputUnits; i++)
                {
                    for (j = 0; j <= numberHiddenUnits; j++)
                    {
                        oldW[i, j] = random_range(-RANGE, +RANGE);
                        w[i, j] = random_range(-RANGE, +RANGE);
                    }
                }

                O = new double[numberTrainingExamples + 1, numberOutputUnits + 1];
                // Fixed: T holds targets, so its second dimension is the output count
                // (the original sized it by numberInputUnits).
                T = new double[numberTrainingExamples + 1, numberOutputUnits + 1];
                X = new double[numberTrainingExamples + 1, numberInputUnits + 1];
            }

            // Store training example d (1-based): inputs x and targets t.
            public void SetTrainingExample(double[] t, double[] x, int d)
            {
                int i, k;

                for (i = 0; i <= numberInputUnits; i++)
                    X[d, i] = x[i];

                for (k = 0; k <= numberOutputUnits; k++)
                    T[d, k] = t[k];
            }

            // squashing function = a sigmoid function
            private double f(double x)
            {
                return 1.0 / (1.0 + Math.Exp(-x));
            }

            // derivative of the squashing function
            private double g(double x)
            {
                return f(x) * (1.0 - f(x));
            }

            public void ForwardPass(double[] x)
            {
                double sum;
                int i, j, k;

                // h[j] is the net (pre-activation) input of hidden unit j.
                for (j = 1; j <= numberHiddenUnits; j++)
                {
                    for (i = 0, sum = 0; i <= numberInputUnits; i++)
                        sum += x[i] * w[i, j];

                    h[j] = sum;
                }

                // o[k] is the net input of output unit k; p[k] is its activation.
                for (k = 1; k <= numberOutputUnits; k++)
                {
                    for (j = 1, sum = h[0] * v[0, k]; j <= numberHiddenUnits; j++)
                        sum += f(h[j]) * v[j, k];

                    o[k] = sum;
                    p[k] = f(o[k]);
                }
            }

            private void BackwardPass(double[] t, double[] x)
            {
                double sum;
                int i, j, k;

                // Output-layer error terms.
                for (k = 1; k <= numberOutputUnits; k++)
                    deltaOutput[k] = (p[k] * (1 - p[k])) * (t[k] - p[k]);

                // Update hidden-to-output weights; oldV holds the previous step.
                for (k = 1; k <= numberOutputUnits; k++)
                {
                    newV[0, k] = learningRate * h[0] * deltaOutput[k];

                    for (j = 1; j <= numberHiddenUnits; j++)
                        newV[j, k] = learningRate * f(h[j]) * deltaOutput[k];

                    for (j = 0; j <= numberHiddenUnits; j++)
                    {
                        v[j, k] += newV[j, k] + momentum * oldV[j, k];
                        oldV[j, k] = newV[j, k];
                    }
                }

                // Hidden-layer error terms (computed with the already-updated v),
                // then update input-to-hidden weights.
                for (j = 1; j <= numberHiddenUnits; j++)
                {
                    for (k = 1, sum = 0; k <= numberOutputUnits; k++)
                        sum += v[j, k] * deltaOutput[k];

                    deltaHidden[j] = g(h[j]) * sum;

                    for (i = 0; i <= numberInputUnits; i++)
                    {
                        newW[i, j] = learningRate * x[i] * deltaHidden[j];
                        w[i, j] += newW[i, j] + momentum * oldW[i, j];
                        oldW[i, j] = newW[i, j];
                    }
                }
            }

            // Train until the mean square error drops to the tolerance or maxEpoch
            // is reached. If intermediate is true, log the error every number epochs.
            public double[,] Backpropagation(bool intermediate, int number)
            {
                double error = double.MaxValue;
                int d, k, epoch = 0;

                while (epoch < maxEpoch && error > tolerance)
                {
                    error = 0.0;

                    for (d = 1; d <= numberTrainingExamples; d++)
                    {
                        double[] x = new double[numberInputUnits + 1];

                        for (int i = 0; i <= numberInputUnits; i++)
                            x[i] = X[d, i];

                        ForwardPass(x);

                        double[] t = new double[numberOutputUnits + 1];

                        for (int i = 0; i <= numberOutputUnits; i++)
                            t[i] = T[d, i];

                        BackwardPass(t, x);

                        for (k = 1; k <= numberOutputUnits; k++)
                        {
                            O[d, k] = p[k];
                            error += Math.Pow(T[d, k] - O[d, k], 2);
                        }
                    }

                    epoch++;
                    error /= (numberTrainingExamples * numberOutputUnits);

                    if (intermediate && epoch % number == 0)
                        tb.Text += error.ToString("E10") + "\r\n";
                }

                tb.Text += "\r\n";
                tb.Text += "Mean Square Error = " + error.ToString("E10") + "\r\n";
                tb.Text += "Epoch = " + epoch + "\r\n";
                return O;
            }
        }
    }
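Read as mathematics, the listing above appears to apply the following rules to each training example. This is a summary derived from the code, not a statement by the author. The symbols n, m, K, and N are shorthand introduced here for numberInputUnits, numberHiddenUnits, numberOutputUnits, and numberTrainingExamples; η is learningRate and α is momentum. The superscript "prev" marks the value stored in oldV or oldW, which holds the previous step without its momentum term (and a small random value before the first update).

```latex
\begin{align*}
f(x) &= \frac{1}{1 + e^{-x}}, \qquad g(x) = f(x)\,\bigl(1 - f(x)\bigr) \\
h_j &= \sum_{i=0}^{n} x_i\, w_{ij}, \qquad j = 1, \dots, m, \quad x_0 = 1 \\
o_k &= v_{0k} + \sum_{j=1}^{m} f(h_j)\, v_{jk}, \qquad p_k = f(o_k) \\
\delta^{\text{out}}_k &= p_k\,(1 - p_k)\,(t_k - p_k) \\
\Delta v_{0k} &= \eta\, \delta^{\text{out}}_k, \qquad
\Delta v_{jk} = \eta\, f(h_j)\, \delta^{\text{out}}_k \quad (j \ge 1) \\
v_{jk} &\leftarrow v_{jk} + \Delta v_{jk} + \alpha\, \Delta v^{\text{prev}}_{jk} \\
\delta^{\text{hid}}_j &= g(h_j) \sum_{k=1}^{K} v_{jk}\, \delta^{\text{out}}_k \\
\Delta w_{ij} &= \eta\, x_i\, \delta^{\text{hid}}_j, \qquad
w_{ij} \leftarrow w_{ij} + \Delta w_{ij} + \alpha\, \Delta w^{\text{prev}}_{ij} \\
E &= \frac{1}{N K} \sum_{d=1}^{N} \sum_{k=1}^{K} \bigl(T_{dk} - O_{dk}\bigr)^2
\end{align*}
```

In the listing, the hidden error term is computed after the v weights have been updated, so the v values in that sum are the new ones. Training stops when E falls to the tolerance or the epoch limit is reached.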

    // Learn the XOR Function
    // Inputs/Targets
    // 0 0 / 0
    // 0 1 / 1
    // 1 0 / 1
    // 1 1 / 0
    // The logistic function works
    // with 0 -> 0.1 and 1 -> 0.9
    //
    // BPNNTestOne (c) 2011
    // James Pate Williams, Jr.
    // All rights reserved.

    using System;
    using System.Windows.Forms;

    namespace BPNNTestOne
    {
        public partial class MainForm : Form
        {
            private int seed = 512;
            private BPNeuralNetwork bpnn;

            public MainForm()
            {
                InitializeComponent();

                int numberInputUnits = 2;
                int numberOutputUnits = 1;
                int numberHiddenUnits = 2;
                int numberTrainingExamples = 4;

                // Row 0 is an unused placeholder (examples are 1-based).
                // Column 0 is the bias input, fixed at 1.0.
                double[,] X =
                {
                    {1.0, 0.0, 0.0},
                    {1.0, 0.0, 0.0},
                    {1.0, 0.0, 1.0},
                    {1.0, 1.0, 0.0},
                    {1.0, 1.0, 1.0}
                };

                // Row 0 and column 0 are unused; column 1 is the scaled XOR target.
                double[,] T =
                {
                    {1.0, 0.0},
                    {1.0, 0.1},
                    {1.0, 0.9},
                    {1.0, 0.9},
                    {1.0, 0.1}
                };

                // Arguments: momentum 0.1, learning rate 0.9, tolerance 1.0e-12.
                bpnn = new BPNeuralNetwork(0.1, 0.9, 1.0e-12,
                    numberHiddenUnits, numberInputUnits,
                    numberOutputUnits, numberTrainingExamples,
                    5000, seed, textBox1);

                for (int i = 1; i <= numberTrainingExamples; i++)
                {
                    double[] x = new double[numberInputUnits + 1];
                    double[] t = new double[numberOutputUnits + 1];

                    for (int j = 0; j <= numberInputUnits; j++)
                        x[j] = X[i, j];

                    for (int j = 0; j <= numberOutputUnits; j++)
                        t[j] = T[i, j];

                    bpnn.SetTrainingExample(t, x, i);
                }

                // Train, logging the error every 500 epochs.
                double[,] O = bpnn.Backpropagation(true, 500);

                textBox1.Text += "\r\n";
                textBox1.Text += "x0\tx1\tTarget\tOutput\r\n\r\n";

                for (int i = 1; i <= numberTrainingExamples; i++)
                {
                    for (int j = 1; j <= numberInputUnits; j++)
                        textBox1.Text += X[i, j].ToString("##0.#####") + "\t";

                    for (int j = 1; j <= numberOutputUnits; j++)
                    {
                        textBox1.Text += T[i, j].ToString("####0.#####") + "\t";
                        textBox1.Text += O[i, j].ToString("####0.#####") + "\t";
                    }

                    textBox1.Text += "\r\n";
                }
            }
        }
    }

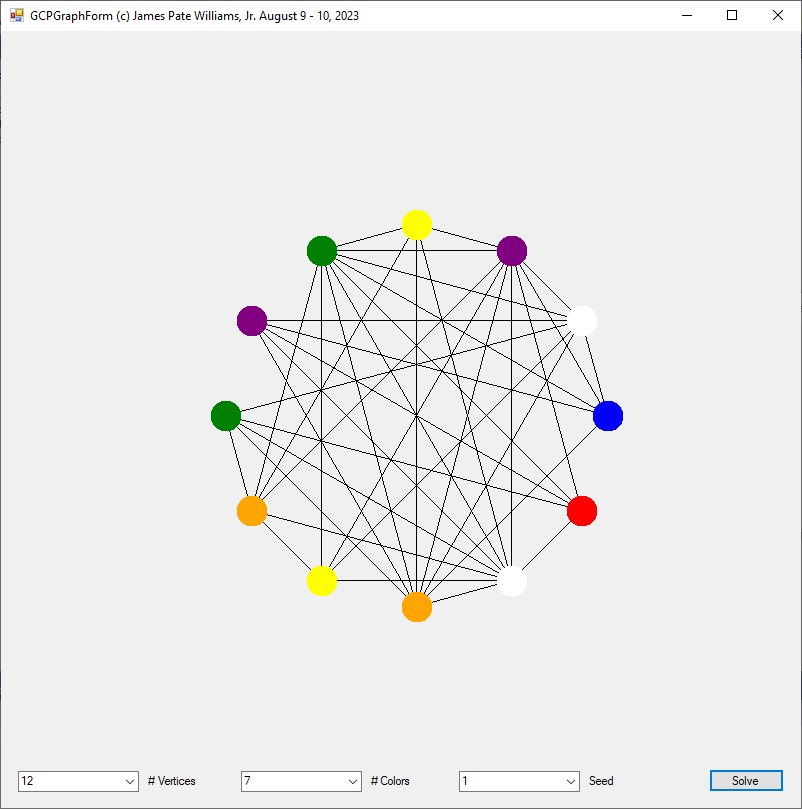

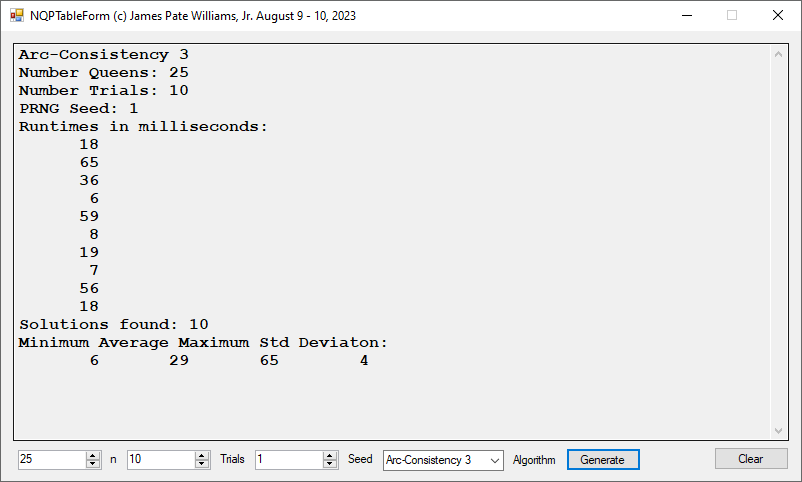

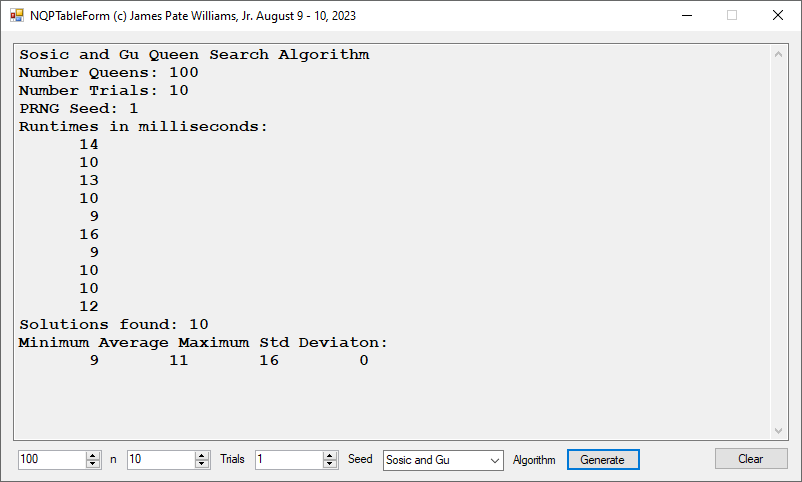

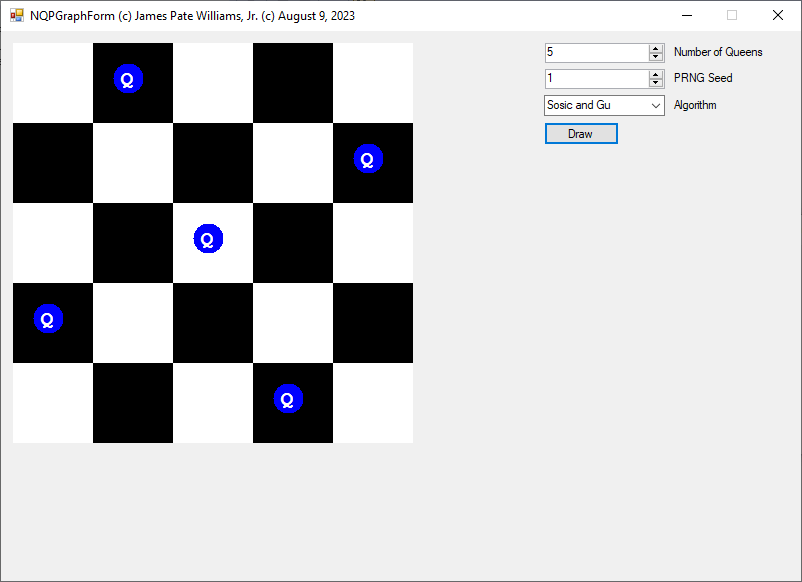

Microsoft C# Constraint Satisfaction Dynamic Link Library (DLL) and Test Application

Constraint Satisfaction DLL Source Code Files

| Source Code File | Lines of Code |
|---|---|
| Arc.cs | 42 |
| Common.cs | 214 |
| Label.cs | 62 |
| NQPArcConsisitencies.cs | 148 |
| NQPBacktrack.cs | 51 |
| NQPSosicGu.cs | 234 |
| Vertex.cs | 18 |
| YakooGCPWCSA.cs | 160 |
| Total | 929 |

Test Application Source Code Files

| Source Code File | Lines of Code |
|---|---|
| GCPGraphForm.cs | 173 |
| MainForm.cs | 42 |
| NQPGraphForm.cs | 137 |
| NQPTableForm.cs | 223 |
| Total | 575 |

Source

1. Foundations of Constraint Satisfaction by Edward Tsang